The Shared Prompt Review

Stop AI from flattening team thinking

Now accepting speeches, keynotes and assignments for 2026

I work with organisations and leadership teams on SuperSkills, human capability, and AI-era decision-making through speeches, workshops, keynotes and advisory work. In person or virtual. UK, Europe, global. → Book a discovery call | → Explore: thesuperskills.com

In addition to this newsletter, I recommend a few others. All free. Check them out here.

Friends,

Most organisations are trying to solve the wrong problem. Research suggests generative AI boosts creativity for only a minority of employees, while for most, it changes very little. The common response has been predictable: more training, better prompts, clearer guidance. That response misses the point. Completely.

The real issue is not how well individuals use these tools. It is that most organisations are not designed to make thinking visible, shared, or discussable. AI simply exposes that weakness and accelerates its effects. When thinking becomes private and output becomes public, leaders lose the ability to lead.

One small change in practice does more to address this than most programmes I have seen. I call it the Shared Prompt Review.

TL;DR: make prompts, edits, and judgement visible once a week, and you will learn more than from another training deck. If you run one, reply and tell me what you saw. I’m collecting what teams are learning.

The transition failure we are living through

Something subtle has shifted in how people work, and most organisations have not noticed it yet: many employees have moved from thinking about the problem to thinking into their keyboards. The cognitive effort that once went into framing, exploring, and sitting with uncertainty now goes into crafting a prompt that feels likely to return something useful. In effect, parts of our thinking have been outsourced before teams have agreed how that outsourcing should work.

This is not a moral failure. Prompting is still new in everyday work. There are no shared norms, no settled workflows, and very little collective language for what “good” looks like. People are improvising.

As a result, important things get lost.

Assumptions disappear into prompts. Trade-offs never surface. Context lives in one person’s head and never reaches the room. Sometimes people do not even mention whether they used AI at all, because there is no clear expectation that they should or shouldn’t. Some are locked into Copilot. Others are using Grok on their private “burner phones”.

Different people develop different workflows. Some use AI early, some late, some constantly, some not at all. What the team receives is output, not process. Decisions get made on the surface, without access to the reasoning beneath.

Teams are no longer thinking together. They are receiving results separately.

The Shared Prompt Review exists for this moment of transition. It is a temporary but necessary scaffold while organisations move from private experimentation to shared judgement.

The problem hiding in plain sight

When people work with AI in private, the most important part of the work disappears.

The prompt is invisible. The assumptions embedded in it go unspoken. The reasons for accepting or rejecting an output never surface. What the team sees is a polished artefact that looks confident and complete, which pushes discussion away from thinking and towards taste. Agreement comes quickly. Learning rarely follows.

Most teams are good at reviewing what was produced. Very few review how it was produced, and that gap becomes expensive once AI enters everyday work. The Shared Prompt Review closes it.

What a Shared Prompt Review is

A Shared Prompt Review is a short, structured conversation in which a team examines how AI was used, not just what it produced.

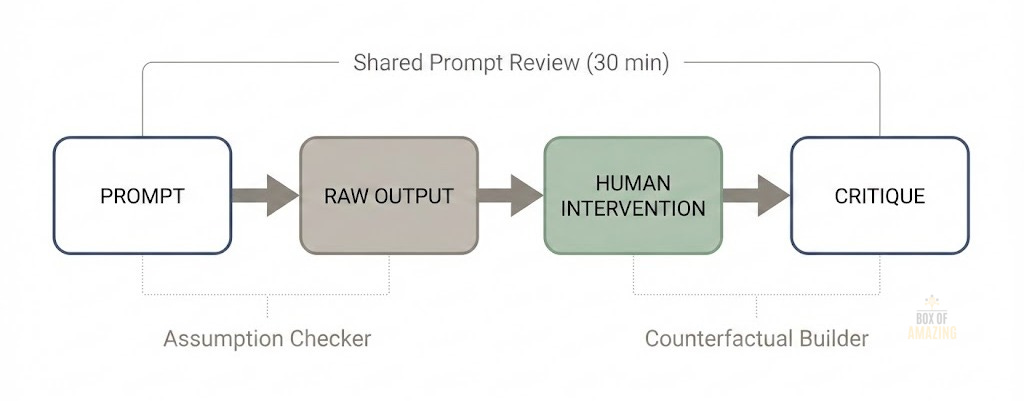

It has four elements:

1. The prompt: The exact prompt that was used, without polishing or retrospective rewriting.

2. The raw output: What the system actually returned before editing.

3. The human intervention: What was kept, what was cut, what was changed, and the reasoning behind those decisions.

4. The critique: Where the output might be wrong, narrow, or misleading, and one alternative direction the team chose not to pursue.

That is the whole structure. No slides. No theory. No prompt-engineering lecture. Just real work, laid bare.

Think of it as a design review for thinking itself. Or, more simply, judgement engineering. When tools can generate answers instantly, the real work shifts to deciding what deserves belief, attention, and action.

How it runs (30 minutes)

This works best in groups of five to eight.

One person brings a real, recent piece of work where AI played a role and walks the group through the prompt and the raw output. They then explain the edits they made and the decisions they took.

Two roles are made explicit during the discussion.

The assumption checker surfaces what the prompt quietly assumed about the problem, the user, or the context.

The counterfactual builder proposes a materially different direction the team could have taken but did not.

The remaining time is open discussion. The rule is simple and non-negotiable: the group does not debate whether the output is “good”. The focus stays on how judgement was applied.

This is not about catching mistakes. It is about making reasoning legible, so the team learns and grows together.

This will feel uncomfortable at the start. You are asking people to show unfinished thinking in systems built to reward certainty. Persist with it over months, even a year, and the payoff is substantial: fewer false starts, sharper decisions, and a team that develops real judgement rather than rehearsed competence.

A brief before-and-after example

A product team piloted Shared Prompt Reviews during a pricing sprint.

Before the change, AI-assisted proposals went straight into slides. Most received one or two late revisions, and decision-making was dominated by the most senior voices in the room.

After two Shared Prompt Reviews, every proposal documented at least three explicit assumptions. The average number of revisions before executive review rose from two to five, and one major decision shifted from tweaking pricing tiers to rethinking feature packaging, avoiding a month of downstream rework.

Nothing slowed down. Waste simply moved earlier, where it was cheaper and easier to fix.

Why this works when training does not

Most training focuses on individual capability. The Shared Prompt Review builds collective judgement instead.

As teams adopt it, metacognition becomes social rather than private. People learn how others think by watching real decisions being made. Default acceptance becomes visible and therefore discussable, while less experienced colleagues gain a way into the conversation by observing how strong thinkers interrogate weak answers.

This is not unusual or indulgent. It’s the same logic as a post-incident review or a project retrospective, applied to thinking rather than execution. A short pause, once work has taken shape, to understand how decisions were made. Done properly, it takes no more than thirty minutes and saves far more time than it costs.

This is why the ritual matters. It redistributes judgement, not just information.

A failure mode to watch for

One caution is worth stating clearly. If teams only bring polished, impressive prompts to look competent, the ritual loses its value. The most useful reviews involve messy prompts and heavily edited outputs, because that is where human judgement did the real work. When everything looks perfect, the team is performing competence rather than building it.

How to pilot this next week

This is a world of work in transition. You do not need permission or budget.

Run a one-week pilot with one review per team, one real artefact, and a strict thirty-minute limit. Track four measures:

1. Percentage of artefacts with at least three explicit assumptions documented

2. Number of meaningful revisions made before leadership or client review

3. Spread of participation in critique, especially from quieter team members

4. Frequency with which the final direction differs meaningfully from the initial output

If two or more of these move in the right direction, the ritual is doing its job.

This does not replace training. It makes training stick.

Where this is less useful

Shared Prompt Reviews are unnecessary for one-off tactical tasks or tightly regulated work where outputs are largely predetermined. They earn their keep where judgement, synthesis, and trade-offs matter, which is precisely where AI quietly reshapes who gets to think.

Why this is bigger than a team habit

AI is already redistributing cognitive authority inside organisations.

When prompts stay private and outputs arrive polished, strategic judgement concentrates in a few hands while everyone else becomes faster at implementation. That is how organisations drift into a two-tier cognitive system without ever choosing it. Left unattended, this stops being a tooling problem and becomes a leadership failure. The Shared Prompt Review is not a silver bullet. It is something more durable: an operational defence against cognitive stratification that keeps the how of thinking collective rather than hidden.

If you lead a team, run one Shared Prompt Review next week. You will see how your organisation really thinks together, and that is the part no training deck ever reveals.

Stay Curious - and don’t forget to be amazing,

PS. If your organisation is grappling with how AI is reshaping work and leadership, I speak and advise on this. Get in touch.

Rahim Hirji | Author, SuperSkills (2026) | Keynote Speaker | Advisor

Building human capability for the AI era.

Tools I Use:

Jamie: AI note-taker without a bot

Refind: AI-curated Brain Food delivered daily

Meco: Newsletter reader outside your inbox

Prompt Cowboy: Prompt Generator

Manus: Best AI agent to do things for you

Recommended Reading:

Now

My Year in Review - As regular readers know, I sit with my family every 1 January to talk through our goals. We use this very simple template. Steal it and get it done with your family by the end of January. I do my own review separately, but this exercise is simple enough to do in an hour. End with a hug.

Things I learned in 2025 - from J. Dillard (must read). Also: 26 best reports for 2026

The surprising truth about the generations that suffer loneliness the most - experts say the modern world is to blame.

How Google Got Its Groove Back and Edged Ahead of OpenAI - After ChatGPT dominated the early chatbot market, Google staged a comeback with a powerful AI model and the biggest search-engine overhaul in years.

Not For Human Consumption - Grey market peptides have built a parallel pharmaceutical infrastructure under the thinnest regulatory pretense. The data reveals a cultural contradiction that extends far beyond weight loss.

Next

Don’t Outsource Your Thinking - I keep saying this to everyone. This piece tells you part of the reason.

Consumer AI predictions - 2-minute read, worth thinking through

Tech to Track in 2026 - Grid-scale storage, tumour-busting ultrasound, and more cutting-edge projects are bubbling up this year

2025: The year in LLMs - must read

Why AI Boosts Creativity for Some Employees but Not Others - (The HBR research that sparked this week’s essay.) Generative AI is increasingly embedded into day-to-day workflows across organizations globally. Employees are using AI tools like ChatGPT to brainstorm solutions, explore alternatives, summarize information, and accelerate projects. As these tools become more capable, many organizations hope they will spark higher levels of creativity, enabling employees to generate more novel and impactful ideas.

If you enjoyed this edition of Box of Amazing, please forward it to a friend or share the link on WhatsApp or LinkedIn.